Big Tech's centralized cloud architecture is struggling to meet the demands of modern mobile AI applications. These applications need sub-50 ms latency to perform well, yet 70% of cloud-based processing exceeds this threshold. Centralized platforms such as AWS continue to provide a vital service and remain a good option for training large models; however, their inability to deliver the near-instantaneous responses that modern AI applications demand is fueling the rapid adoption of mobile edge computing (MEC).

MEC providers can process data locally and, when interconnected with 5G networks, can deliver latency reductions of up to 80% compared to traditional cloud infrastructure. With IoT devices alone projected to generate over 90 zettabytes of data by the end of 2025, and the market for mobile edge computing expected to grow at a rate of 13.8%, reaching nearly $380 billion by 2028, the mobile application industry is witnessing a fundamental shift in how modern applications are executed.

Understanding Mobile Edge Computing Architecture

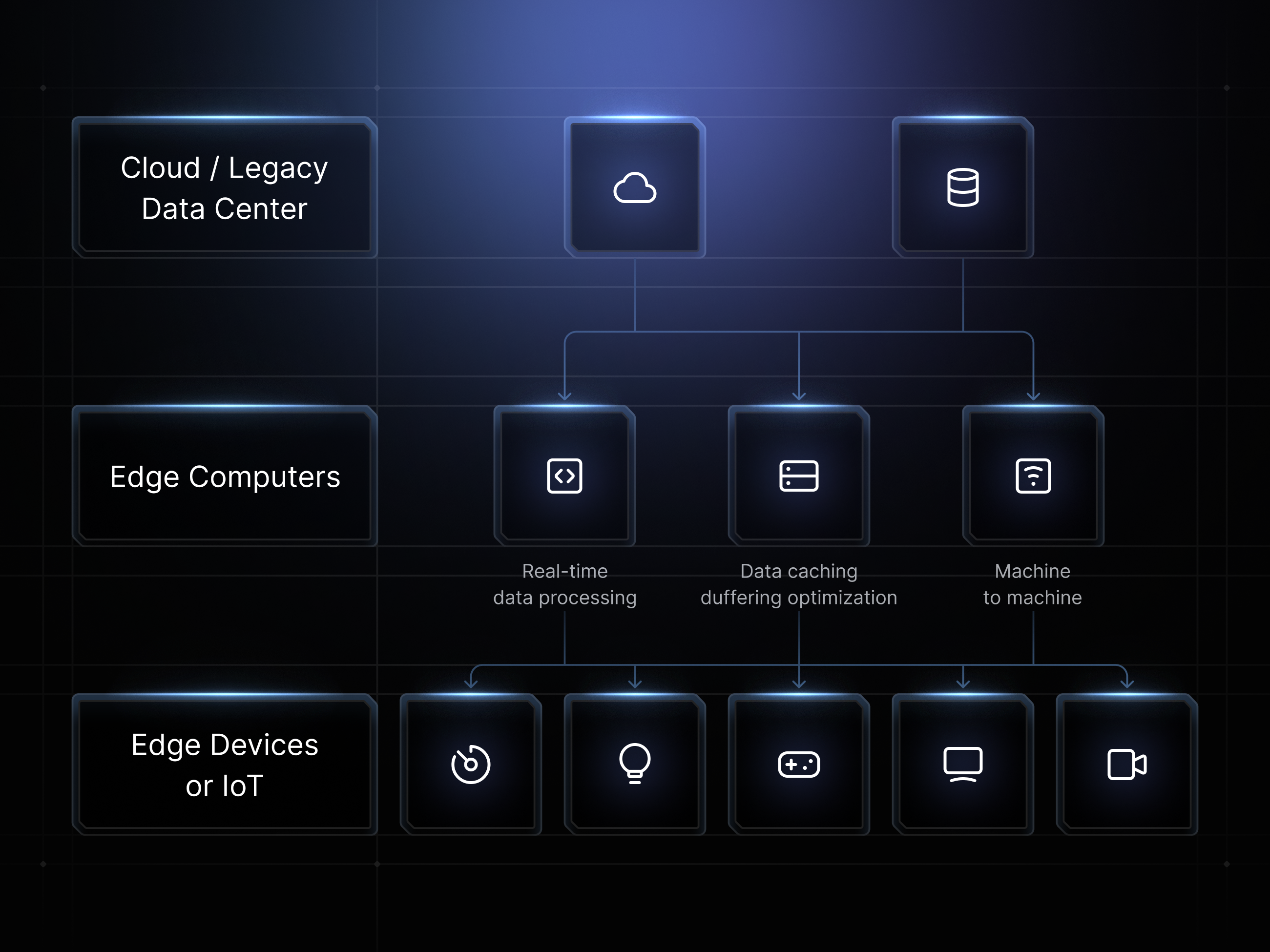

MEC is not just a newer, faster form of cloud computing. It is a shift in how and where computation occurs in applications, and recognizing that is key to realizing its full potential. Edge computing creates a distributed processing framework at the edge of mobile networks by positioning compute within or adjacent to base stations. This framework establishes a hierarchy of processing nodes, so data can be placed strategically and travels the shortest possible distance. MEC networks typically consist of three distinct tiers:

- Edge Devices: Smartphones and IoT sensors

- Edge Servers: Micro data centers at cell towers

- Regional Cloud Infrastructure
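
As a rough illustration of how the three tiers might be used, the sketch below picks the nearest tier that can host a model and still meet a latency budget. The `Tier` class, the `place_workload` function, and every number in `TIERS` are assumptions made for this example, not figures from any provider. It also assumes compute time is identical on every tier, which real hardware would not honor.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tier:
    name: str
    round_trip_ms: float  # assumed network round trip to reach this tier
    max_model_mb: int     # assumed largest model the tier can host


# Illustrative placeholder values, ordered from nearest to farthest.
TIERS = [
    Tier("edge-device", 0.0, 50),
    Tier("edge-server", 10.0, 2_000),
    Tier("regional-cloud", 60.0, 100_000),
]


def place_workload(model_mb: int, compute_ms: float,
                   latency_budget_ms: float) -> Optional[str]:
    """Return the nearest tier that fits the model within the latency budget."""
    for tier in TIERS:
        fits = model_mb <= tier.max_model_mb
        in_budget = tier.round_trip_ms + compute_ms <= latency_budget_ms
        if fits and in_budget:
            return tier.name
    return None  # no tier satisfies both constraints
```

With these placeholder numbers, a 500 MB model with a 50 ms budget lands on the edge server, while a 5 GB model cannot meet the same budget on any tier.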

By reducing the distance that data must travel to be processed, mobile edge computing creates several distinct advantages over its cloud competitors that are essential for AI/ML mobile applications.

- Reduced Latency: MEC processes data within 10-50 kilometers of the user, compared with hundreds of kilometers for centralized cloud providers.

- Bandwidth Optimization: Most processing occurs locally, and only essential data is sent to centralized clouds, reducing network congestion.

- Improved Security / Privacy: Users' most sensitive data can be processed locally without leaving the edge.

- Increased Reliability: Applications continue to function as usual without requiring continuous cloud connectivity.
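
A back-of-the-envelope calculation shows why distance matters for latency. Light in optical fiber covers roughly 200 km per millisecond, so propagation alone sets a floor on round-trip time. The 50 km figure comes from the range above; the 1,500 km distance to a cloud region is an assumption chosen for illustration.

```python
FIBER_KM_PER_MS = 200.0  # approximate speed of light in optical fiber


def round_trip_propagation_ms(distance_km: float) -> float:
    """Lower bound on round-trip time from propagation delay alone."""
    return 2 * distance_km / FIBER_KM_PER_MS


# Distances are illustrative: 50 km edge server, assumed 1,500 km cloud region.
for label, km in [("edge server", 50), ("distant cloud region", 1500)]:
    print(f"{label}: {round_trip_propagation_ms(km):.1f} ms")
```

Under these assumptions the floor is 0.5 ms for the edge server and 15 ms for the distant region. Routing hops, queuing, and processing time come on top of that and often add considerably more.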

Accelerating Mobile Edge Computing with 5G

Running parallel to the advancement of MEC is the continued expansion of 5G networks, which can deliver peak speeds more than twenty times faster than LTE. MEC, in turn, brings compute closer to the end user, reducing the overall latency of the network. Together, the two technologies address four pain points that dynamic mobile AI/ML applications have been encountering:

- High Latency: Significantly faster network speeds and lower network latency allow response times approaching 1 ms for critical applications.

- Device Connectivity: Connects thousands of IoT devices per square kilometer for increased local data flow.

- Network Slicing: Creates dedicated virtual networks that are resilient to cloud outages and other disruptions, allowing for uninterrupted service.

- Edge Orchestration: Enables dynamic workloads based on real-time demand.
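
To make the idea of demand-based workload placement more concrete, here is a minimal sketch of a dispatcher that sends each request to the least-utilized edge node and overflows to a regional cloud when every node is full. `EdgeNode`, `dispatch`, and the `"regional-cloud"` fallback name are hypothetical; a real orchestrator would also account for health checks, request completion, and network conditions.

```python
from dataclasses import dataclass


@dataclass
class EdgeNode:
    name: str
    capacity: int    # maximum concurrent requests the node accepts
    active: int = 0  # requests currently running on the node


def dispatch(nodes: list[EdgeNode], fallback: str = "regional-cloud") -> str:
    """Route one request to the least-utilized node, or to the fallback."""
    available = [n for n in nodes if n.active < n.capacity]
    if not available:
        return fallback  # every edge node is saturated
    target = min(available, key=lambda n: n.active / n.capacity)
    target.active += 1   # record the new request on the chosen node
    return target.name
```

The sketch only increments load; a fuller version would decrement `active` when a request finishes.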

Mobile Edge Computing AI/ML Applications

It is important to recognize that the advancement of MEC does not necessarily entail the complete elimination of cloud processing. For most projects and applications, a hybrid model that leverages edge processing for real-time decisions, while utilizing cloud connectivity for model updates and complex analytics, will likely be the best approach. Understanding this dynamic for AI/ML applications is key. Edge computing for AI/ML applications on mobile thrives in environments like:

Real-Time Inference Applications

Edge AI hardware, such as the NVIDIA Jetson AGX Orin, provides the compute infrastructure necessary for computationally demanding applications, including autonomous robots, drones, and self-driving vehicles, to succeed. Mobile applications enhanced by MEC can perform object detection, facial recognition, and natural language processing with minimal latency while preserving user privacy through local processing.
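
One way to picture the hybrid split for real-time inference is a pipeline that always answers from a local model and only sets aside low-confidence samples for later cloud-side analysis. The sketch below is an assumption-laden illustration: `HybridPipeline`, its thresholds, and the `uploaded_batches` list (a stand-in for a real cloud client) are all hypothetical. In practice, what leaves the edge should be limited to data that privacy policy permits.

```python
from collections import deque


class HybridPipeline:
    """Answer locally in real time; batch low-confidence samples for the cloud."""

    def __init__(self, local_model, confidence_floor=0.8, batch_size=3):
        self.local_model = local_model  # callable: sample -> (label, confidence)
        self.confidence_floor = confidence_floor
        self.batch_size = batch_size
        self.pending = deque()          # samples waiting for upload
        self.uploaded_batches = []      # stand-in for a real cloud client

    def infer(self, sample):
        label, confidence = self.local_model(sample)  # real-time path stays local
        if confidence < self.confidence_floor:
            self.pending.append(sample)               # keep for later analysis
        if len(self.pending) >= self.batch_size:
            self._flush()
        return label

    def _flush(self):
        """Send the pending samples as one batch and clear the queue."""
        self.uploaded_batches.append(list(self.pending))
        self.pending.clear()
```

The caller never waits on the cloud: `infer` returns the local label immediately, and uploads happen in batches to limit bandwidth use.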

Mobile AI Agents

Localized mobile AI agents that require dynamic response times particularly benefit from the advancement of mobile edge computing. Lightweight models, such as Yi, Phi, and Llama3, can achieve generation throughput of 5 to 12 tokens per second with less than 50% CPU and RAM usage on edge devices.
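
To put that throughput range in perspective, a quick calculation estimates how long a reply takes to generate. The 60-token reply length is an assumption chosen for illustration; only the 5 and 12 tokens-per-second figures come from the text above.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to generate a reply at a steady token throughput."""
    return num_tokens / tokens_per_second


REPLY_TOKENS = 60  # assumed length of a short agent reply

slowest = generation_seconds(REPLY_TOKENS, 5)   # 12.0 seconds
fastest = generation_seconds(REPLY_TOKENS, 12)  # 5.0 seconds
print(f"{fastest:.1f} to {slowest:.1f} seconds for {REPLY_TOKENS} tokens")
```

At these rates, keeping responses short and streaming tokens as they are produced are likely to matter for perceived responsiveness.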

Implementation Strategies for Edge AI Deployment

Successfully deploying AI workloads through MEC requires careful consideration of model optimization, hardware constraints, and network orchestration. Understanding these dynamics can help developers better leverage MEC.

Model Optimization Techniques

Frameworks like Google’s LiteRT (formerly TensorFlow Lite) offer lightweight alternatives specifically designed for mobile and edge devices, emphasizing memory efficiency and fast execution times.
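
One widely used optimization that such frameworks support is post-training quantization, which stores weights as 8-bit integers instead of 32-bit floats and so cuts weight storage to roughly a quarter. The sketch below shows the arithmetic of a simple symmetric int8 scheme in plain Python. It is not LiteRT's API, and the function names are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # avoid dividing by zero
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the int8 representation."""
    return [q * scale for q in quantized]


weights = [-1.0, -0.25, 0.0, 0.5, 0.9]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(f"scale={scale:.5f}, max error={max_error:.5f}")
```

The reconstruction error per weight stays within about half a quantization step (`scale / 2`), which is the trade-off accepted in exchange for the smaller footprint.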

Hardware Selection

When choosing between specialized AI accelerators, such as Google's Edge TPU, and general-purpose processors, the decision depends on specific application requirements and power constraints. Developers should clearly understand their applications' demands before hardware selection takes place.
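
A simple way to frame that decision is to filter candidates by the application's throughput requirement and power budget, then prefer the most efficient option that remains. The sketch below assumes hypothetical `Hardware` records; the throughput and wattage values a team plugs in would need to come from its own benchmarks, not from this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Hardware:
    name: str
    inferences_per_second: float  # measured throughput for the target model
    watts: float                  # sustained power draw under load


def pick_hardware(candidates, min_ips: float,
                  max_watts: float) -> Optional[Hardware]:
    """Pick the most power-efficient candidate that meets both constraints."""
    eligible = [
        h for h in candidates
        if h.inferences_per_second >= min_ips and h.watts <= max_watts
    ]
    # default=None covers the case where nothing satisfies the constraints
    return max(eligible,
               key=lambda h: h.inferences_per_second / h.watts,
               default=None)
```

Ranking by inferences per watt is only one possible criterion; cost, thermal limits, or framework support may weigh more heavily for a given application.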

Network Orchestration

Kubernetes-based solutions, such as K3s, offer lightweight container orchestration specifically designed for edge deployments, enabling automatic scaling and seamless integration with existing cloud infrastructure.
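
Because K3s is a Kubernetes distribution, an edge workload can be described with a standard Deployment manifest. The sketch below builds one as a Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The workload name, image URL, resource limits, and the `example.com/tier` node label are all hypothetical placeholders.

```python
import json


def edge_deployment(name: str, image: str, replicas: int = 2) -> dict:
    """Build a Kubernetes Deployment manifest pinned to edge-labeled nodes."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Hypothetical label; nodes would need to be labeled to match.
                    "nodeSelector": {"example.com/tier": "edge"},
                    "containers": [{
                        "name": name,
                        "image": image,
                        # Small limits suited to constrained edge hardware.
                        "resources": {
                            "limits": {"cpu": "500m", "memory": "256Mi"},
                        },
                    }],
                },
            },
        },
    }


manifest = edge_deployment("edge-inference",
                           "registry.example.com/edge-inference:1.0")
print(json.dumps(manifest, indent=2))
```

Generating the manifest in code makes it easy to stamp out one Deployment per site with different replica counts, though a plain YAML file would work just as well for a single cluster.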

Emerging Edge Computing Narratives

As the pace of innovation accelerates and more AI/ML mobile applications come online, mobile edge computing will play an increasingly leading role in local data processing. Some narratives to pay close attention to are:

- Neuromorphic Computing: Designing hardware and software that simulate the neural and synaptic structures and functions of the brain to process information. This market is set to grow from $1.44 billion in 2024 to an expected $4.12 billion by 2029.

- Decentralized GPU Networks: DePIN platforms, such as io.net, can provide a full suite of decentralized edge processing applications that developers can tap into. They can enable dynamic resource allocation, open GPU marketplaces, and cost-effective scaling for AI/ML workloads. For example, io.cloud is the programmable infrastructure layer of io.net, providing developers and enterprises with access to decentralized GPU clusters that offer the scale, speed, and control necessary to train, deploy, and run real AI workloads.

The Future of Distributed Mobile Edge Computing

Mobile edge computing represents more than a simple network upgrade for an existing centralized system. It is helping lay the infrastructure foundation for the future of mobile-based data processing. Its ultimate success will not be determined by replacing cloud-based processing but by acting harmoniously alongside it in hybrid models. Cloud will still have its place in larger AI/ML processing functions, but for the immediate dynamic intake and processing of real-time data, MEC opens new opportunities for teams building in the space.

Its convergence with the advancement of 5G networks is helping establish the next generation of AI applications where sophisticated machine learning capabilities can operate seamlessly across millions of edge devices.
