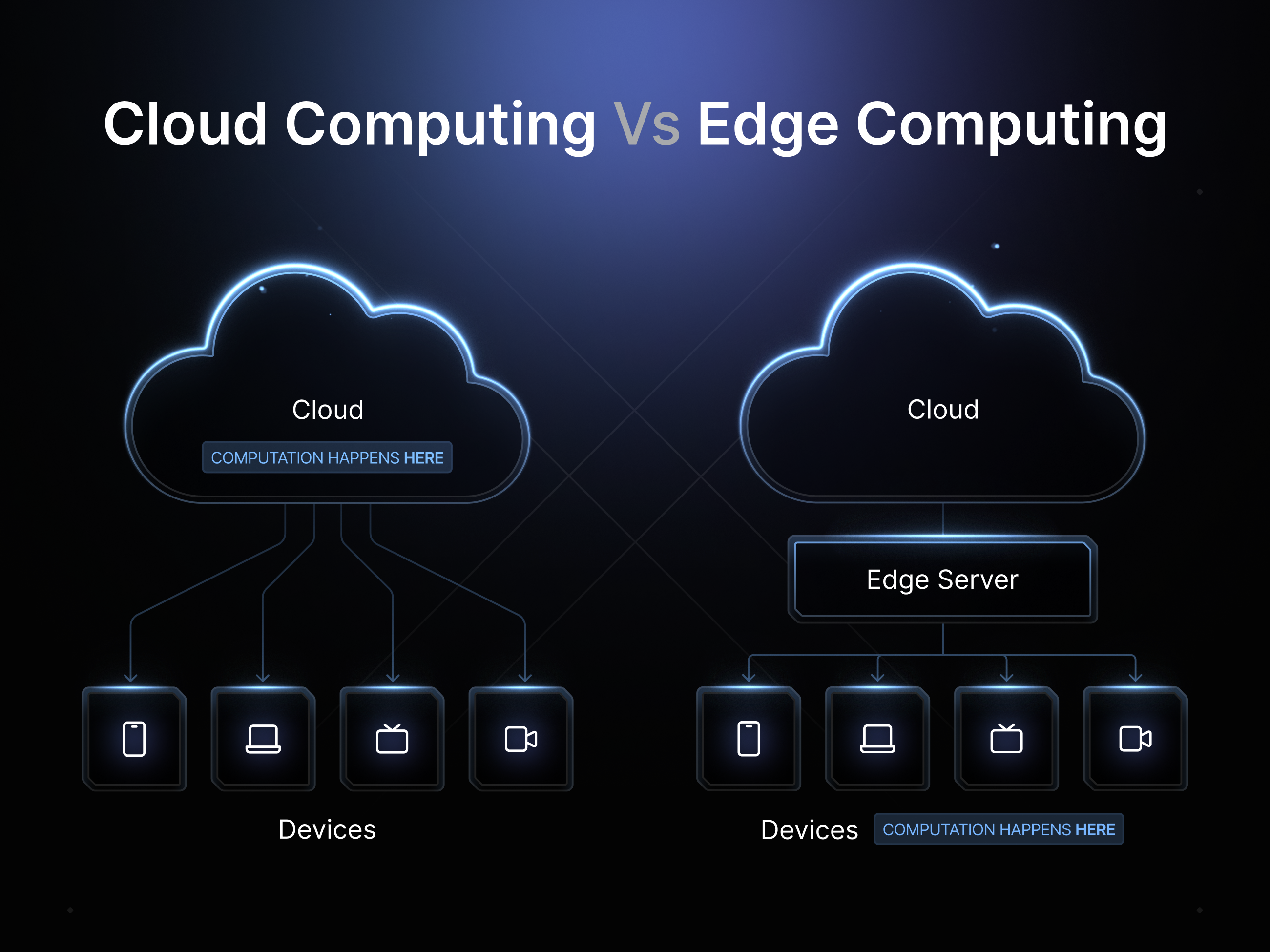

Edge computing processes data near its source, which reduces latency and bandwidth usage and improves real-time performance. Cloud computing is centralized and gives you on-demand access to compute resources from remote data centers.

Gartner predicts that 75% of enterprise data will be processed at the edge by 2025, up from just 10% in 2018. Stratis Research, meanwhile, predicts that the global edge computing market will expand from $38.32 billion in 2024 to $1,065.63 billion by 2033.

Yet most organizations still struggle to choose between edge computing and traditional cloud computing infrastructure.

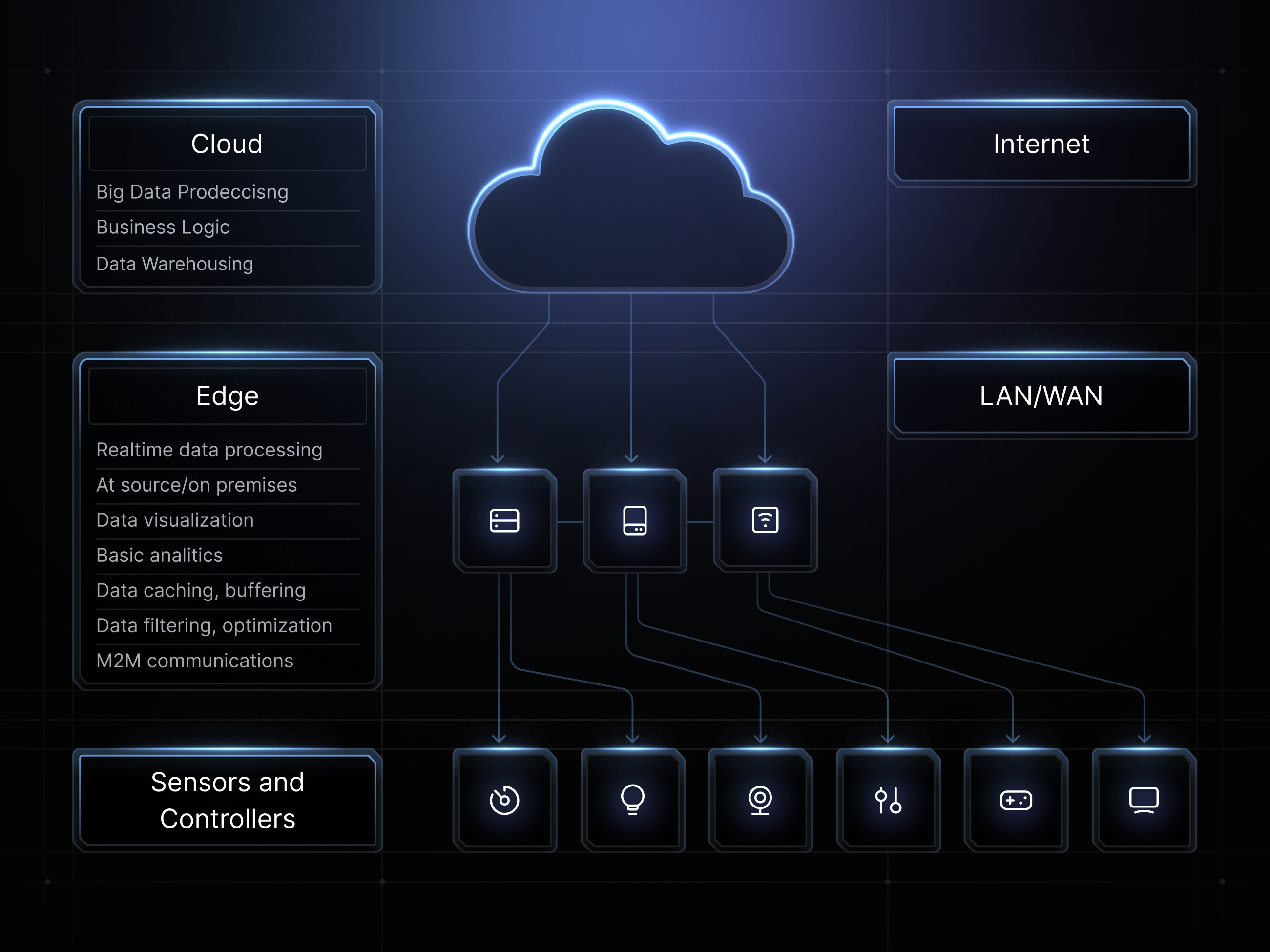

The question of cloud vs edge computing is less about competing technologies and more about how to integrate the two as complementary components of your tech stack. In this article, we will unpack each model's infrastructure and how it works so that you can better understand how and where to apply each one.

Computing Fundamentals

Cloud computing is well suited to cost-conscious processing with massive or global scaling requirements. Edge computing, on the other hand, is well suited to local or offline systems that need very low latency or strong privacy.

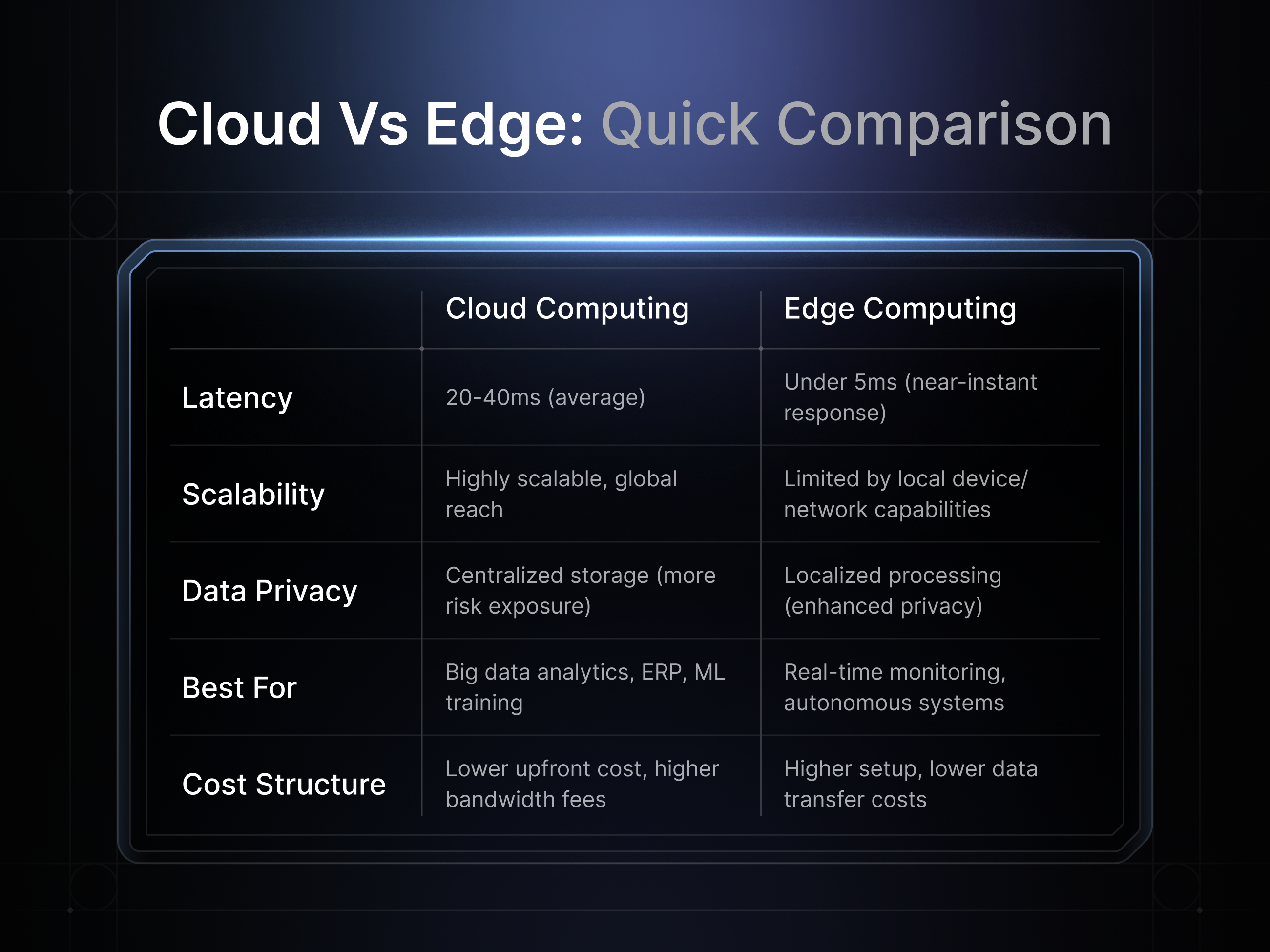

Cloud computing's centralized model provides virtually unlimited storage and processing power, making it ideal for applications like data analytics, machine learning model training, and enterprise resource planning. Edge computing, by contrast, processes data locally and can achieve latency of around 5ms, compared with the cloud's 20-40ms average. This low latency, together with reduced bandwidth demands, makes edge computing ideal for applications that demand real-time responses, like autonomous vehicles, which must make split-second decisions and can require 4000+ TOPS of onboard processing power.
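To make the latency gap concrete, here is a minimal sketch that checks whether a workload's response deadline can be met once the network round trip is added to its compute time. The latency constants come from the figures above; the 20ms deadline and 10ms compute time are hypothetical values chosen only for illustration.

```python
# Round-trip latencies in milliseconds, taken from the figures quoted above.
EDGE_LATENCY_MS = 5
CLOUD_LATENCY_MS = (20, 40)  # (best case, worst case) of the quoted average range


def fits_deadline(deadline_ms: float, compute_ms: float, network_ms: float) -> bool:
    """Return True if network round trip plus compute time meets the deadline."""
    return network_ms + compute_ms <= deadline_ms


# Hypothetical workload: a control loop that must respond within 20 ms
# and needs 10 ms of compute wherever it runs.
deadline_ms, compute_ms = 20, 10

edge_ok = fits_deadline(deadline_ms, compute_ms, EDGE_LATENCY_MS)
cloud_ok = fits_deadline(deadline_ms, compute_ms, CLOUD_LATENCY_MS[0])  # cloud best case

print(f"edge meets deadline: {edge_ok}")
print(f"cloud (best case) meets deadline: {cloud_ok}")
```

Under these assumed numbers the workload only fits at the edge, because even the best-case cloud round trip consumes the whole deadline before any compute happens.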

Cost & Performance Considerations

Comparing the cost of the two models is not as simple as setting their operating bills side by side. For instance, cloud computing offers a lower initial cost, while edge computing reduces ongoing bandwidth expenses.
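One way to reason about that trade-off is a simple break-even calculation: how many months does it take for the lower running costs of an edge deployment to pay back its higher upfront cost? The sketch below is an assumption-laden illustration; the function names and every dollar figure are hypothetical and not drawn from any real pricing.

```python
from typing import Optional


def cumulative_cost(upfront: float, monthly: float, months: int) -> float:
    """Total spend after a given number of months."""
    return upfront + monthly * months


def break_even_month(
    cloud_upfront: float,
    cloud_monthly: float,
    edge_upfront: float,
    edge_monthly: float,
    horizon_months: int = 120,
) -> Optional[int]:
    """First month at which edge is no more expensive than cloud, or None."""
    for month in range(horizon_months + 1):
        edge_total = cumulative_cost(edge_upfront, edge_monthly, month)
        cloud_total = cumulative_cost(cloud_upfront, cloud_monthly, month)
        if edge_total <= cloud_total:
            return month
    return None


# Hypothetical figures: cloud has no upfront cost but higher monthly
# bandwidth and compute fees; edge needs hardware upfront but costs less to run.
month = break_even_month(
    cloud_upfront=0, cloud_monthly=3_000,
    edge_upfront=40_000, edge_monthly=1_000,
)
print(f"edge breaks even after {month} months")
```

If the monthly savings are zero or negative, the function returns `None`, which is a useful signal that the edge investment never pays for itself on cost alone within the chosen horizon.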

When you dive into the performance benchmarks, there are clear benefits to each. Edge computing delivers lower latency and keeps working during network disruptions, while cloud computing excels at scalability and raw processing power. Understanding what your project requires will help you decide between the two and where to apply each.

It is also important to think about resource allocation when opting for edge computing. Scale too quickly, and your budget can balloon. Fortunately, innovations in distributed edge resource management software have made it easier to track and deploy edge computing, and they are a leading reason the edge space is growing at a 23.7% CAGR.
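For readers less familiar with the metric, a compound annual growth rate (CAGR) applies the same percentage growth every year, so the effect compounds. The sketch below shows the arithmetic; the 23.7% rate is the figure quoted above, while the five-year horizon and the normalized starting value of 1.0 are arbitrary choices for illustration.

```python
def project(value: float, cagr: float, years: int) -> float:
    """Compound `value` forward at a fixed annual growth rate."""
    return value * (1 + cagr) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Recover the annual growth rate that turns `start` into `end`."""
    return (end / start) ** (1 / years) - 1


# Normalized starting size of 1.0, grown at 23.7% per year for five years.
growth_factor = project(1.0, 0.237, 5)
print(f"growth over 5 years: {growth_factor:.2f}x")

# Round trip: the implied rate should match the rate we started from.
print(f"implied CAGR: {implied_cagr(1.0, growth_factor, 5):.3f}")
```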

Industry-Specific Use Cases & Implementation

Healthcare is a prime example of an industry that is adopting a hybrid compute architecture. Cloud computing enables the collection, sharing, and analysis of long-term trends across global populations. At the same time, edge computing devices facilitate direct remote diagnostics and telemedicine, improving access to healthcare in underserved areas while keeping patient records private.

In manufacturing, edge computing is used in IoT-powered sensors and robotics, as well as in safety and security systems that require instant responses for emergency shutdowns. Manufacturers also leverage cloud computing's data analysis and machine learning capabilities for predictive machine maintenance, supply chain analytics, and production optimization.
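The manufacturing split described above can be sketched in a few lines: the safety decision is made locally with no network round trip, while routine readings are buffered and shipped to the cloud in batches for later analysis. Everything here is hypothetical, including the `EdgeController` class, the temperature threshold, and the `upload` callback, which stands in for whatever cloud ingestion call a real system would use.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class EdgeController:
    shutdown_threshold_c: float
    batch_size: int
    upload: Callable[[List[float]], None]  # stand-in for a cloud ingestion call
    buffer: List[float] = field(default_factory=list)
    shut_down: bool = False

    def on_reading(self, temperature_c: float) -> None:
        # Safety decision happens on the device, independent of the network.
        if temperature_c >= self.shutdown_threshold_c:
            self.shut_down = True
        # Non-urgent data is buffered and sent to the cloud in batches,
        # which keeps bandwidth usage low.
        self.buffer.append(temperature_c)
        if len(self.buffer) >= self.batch_size:
            self.upload(list(self.buffer))
            self.buffer.clear()


uploaded: List[List[float]] = []  # pretend cloud storage for this demo
controller = EdgeController(
    shutdown_threshold_c=90.0, batch_size=3, upload=uploaded.append
)

for reading in [70.0, 72.5, 95.0, 71.0]:
    controller.on_reading(reading)

print("shut down:", controller.shut_down)
print("batches sent to cloud:", uploaded)
print("still buffered on device:", controller.buffer)
```

The design point is that the shutdown path never waits on the upload path, so a slow or unavailable connection delays analytics but not safety.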

Autonomous vehicles demonstrate how a hybrid split architecture can operate harmoniously. Tesla processes immediate driving decisions at the edge while leveraging cloud-based fleet learning to improve overall system performance. Edge computing improves the vehicle's emergency response times, while cloud systems analyze large volumes of data for long-term urban planning and route optimization.

Hybrid Approaches & Strategic Planning

With 75 billion connected devices expected in 2025, evaluating edge, cloud, and hybrid cloud-edge computing models is becoming essential for any company managing distributed data. The Industrial Internet of Things (IIoT) has driven the explosive growth of edge computing in recent years by helping organizations digitize their facilities.

However, the companies driving innovation are neither abandoning cloud infrastructure nor treating cloud and edge as an either-or choice. Instead, they are implementing complementary hybrid systems that optimize data flow between local processing and centralized analytics. The most successful of these organizations understand the benefits and trade-offs of each model and where each will have the most impact in their tech stack. Hybrid models are especially valuable in industries where real-time responsiveness and centralized insights must coexist, such as autonomous systems, energy grids, and retail logistics.
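As a rough illustration of that placement decision, the sketch below assigns a workload to edge, cloud, or a hybrid of the two based on the criteria discussed in this article. The `Workload` fields, the `place` function, and the example workloads are all hypothetical; the 20ms cutoff is simply the lower bound of the cloud latency range cited earlier, and a real assessment would weigh far more factors.

```python
from dataclasses import dataclass

# Lower bound of the cloud latency range quoted earlier in the article.
CLOUD_BEST_CASE_MS = 20


@dataclass
class Workload:
    name: str
    max_latency_ms: float
    needs_offline: bool = False
    privacy_sensitive: bool = False
    needs_elastic_scale: bool = False


def place(workload: Workload) -> str:
    """Suggest 'edge', 'cloud', or 'hybrid' for a workload (simplified heuristic)."""
    edge_pull = (
        workload.max_latency_ms < CLOUD_BEST_CASE_MS
        or workload.needs_offline
        or workload.privacy_sensitive
    )
    cloud_pull = workload.needs_elastic_scale
    if edge_pull and cloud_pull:
        return "hybrid"
    if edge_pull:
        return "edge"
    return "cloud"


workloads = [
    Workload("emergency shutdown", max_latency_ms=10),
    Workload("model training", max_latency_ms=60_000, needs_elastic_scale=True),
    Workload("vehicle with fleet learning", max_latency_ms=10, needs_elastic_scale=True),
]
placements = {w.name: place(w) for w in workloads}
for name, target in placements.items():
    print(f"{name}: {target}")
```

A heuristic like this is only a starting point for discussion, but writing the criteria down makes it easier to see which workloads genuinely need both tiers.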

Companies looking to integrate edge computing into their stack should implement a phased migration plan from their current infrastructure, starting with simple use cases that offer clear edge benefits before transitioning to a more complex hybrid model. They should also future-proof for the expansion of 5G networks and more sophisticated AI capabilities.

Success in this area doesn't depend on choosing the "right" technology but on implementing the combination of cloud and edge computing capabilities that best aligns with your specific business objectives and technical requirements.

Key Takeaways

✅ Use edge computing when latency, data privacy, or offline operation is critical.

✅ Use cloud computing when massive scalability, storage, and processing power are required.

✅ Hybrid models allow you to leverage the strengths of both, starting small and expanding incrementally.

✅ Plan phased integration, beginning with use cases that have clear edge computing benefits.

✅ Future-proof with considerations for 5G expansion and emerging AI use cases.
